Using OpenAI to increase time spent on your blog

Today we're going to learn how to build a recommendation system for a social publishing platform like medium.com. The full source code of the project we are going to build is available here. You can also try an interactive version here.

You can also deploy the final version on Vercel right now:

Overview

We're going to cover a few things here, but the big picture is that:

- We will turn our blog posts into a numerical representation called "embeddings" and store them in a database.

- We will retrieve the blog posts most similar to the one currently being read.

- We will display these similar blog posts in a sidebar.
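To make "most similar" concrete: once every post is a vector, a common way to compare two vectors is cosine similarity, and recommending posts amounts to ranking the stored vectors by that score. The sketch below is a toy that assumes tiny, made-up 3-dimensional vectors (real embeddings have far more dimensions) and hypothetical slugs other than understanding-machine-learning-embeddings. It illustrates the idea only and does not describe how Embedbase ranks results internally.

```typescript
// Toy illustration: posts already turned into (made-up) embedding vectors.
type Embedded = { slug: string; vector: number[] };

// Cosine similarity: dot(a, b) / (|a| * |b|), between -1 and 1 for non-zero vectors.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("vectors must have the same length");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Return the slugs of the `limit` posts closest to `query`, skipping the post being read.
function mostSimilar(query: Embedded, posts: Embedded[], limit: number): string[] {
  return posts
    .filter((p) => p.slug !== query.slug)
    .map((p) => ({ slug: p.slug, score: cosineSimilarity(query.vector, p.vector) }))
    .sort((x, y) => y.score - x.score) // highest similarity first
    .slice(0, limit)
    .map((p) => p.slug);
}

// Hypothetical vectors: the first two posts point in a similar direction.
const posts: Embedded[] = [
  { slug: "understanding-machine-learning-embeddings", vector: [0.9, 0.1, 0.0] },
  { slug: "vector-databases-101", vector: [0.8, 0.3, 0.1] },
  { slug: "baking-sourdough", vector: [0.0, 0.2, 0.9] },
];

console.log(mostSimilar(posts[0], posts, 2)); // logs [ 'vector-databases-101', 'baking-sourdough' ]
```

Excluding the post being read mirrors what the post page does later on; in this tutorial the ranking itself happens inside Embedbase.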

Concretely, it boils down to:

```typescript
embedbase.dataset('recsys').batchAdd('<my blog posts>')
embedbase.dataset('recsys').search('<my blog post content>')
```

Here, search returns the stored content most similar to the text you pass in, and those results are our recommendations.

Yay! Two lines of code to implement the core of a recommendation system.

Diving into the implementation

As a reminder, we will create a recommendation engine for your publishing platform using NextJS and tailwindcss, with Embedbase as the database. Other libraries used:

- gray-matter to parse Markdown front-matter (used to store document metadata, which is useful when you get the recommended results)

- swr to easily fetch data from NextJS API endpoints

- heroicons for icons

- and, last, react-markdown to display nice Markdown to the user

The Markdown component also relies on react-syntax-highlighter and parse-numeric-range for code highlighting.

Alright, let's get started 🚀

Here's what you'll need for this tutorial:

- An Embedbase API key. Embedbase is a database that lets you find the "most similar results" to a query. Not all databases are suited for this kind of job; Embedbase finds the "semantic similarity" between a search query and stored content.

You can now clone the repository like so:

```bash
git clone https://github.com/different-ai/embedbase-recommendation-engine-example
cd embedbase-recommendation-engine-example
```

Open it with your favourite IDE, and install the dependencies:

```bash
npm i
```

Now you should be able to run the project:

```bash
npm run dev
```

Write the Embedbase API key you just created in .env.local:

```
EMBEDBASE_API_KEY="<YOUR KEY>"
```

Creating a few blog posts

As you can see, the _posts folder contains a few blog posts, each starting with YAML front-matter metadata that gives additional information about the post.

ℹ️ Disclaimer: The blog posts included in the repo you just downloaded were generated by GPT-4.
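For reference, each file in _posts starts with a front-matter block delimited by --- lines, followed by the Markdown body. The sketch below shows, in simplified form, what gray-matter extracts from such a file. parseFrontMatter and the example post are hypothetical, and only flat key: value pairs are handled, so keep using gray-matter (as the tutorial does) for real YAML.

```typescript
type Parsed = { data: Record<string, string>; content: string };

// Simplified front-matter splitter: NOT a YAML parser, only flat "key: value" lines.
function parseFrontMatter(file: string): Parsed {
  const lines = file.split(/\r?\n/);
  // No opening "---" line: the whole file is Markdown content.
  if (lines[0]?.trim() !== "---") return { data: {}, content: file };
  // Find the closing "---" line.
  const end = lines.findIndex((line, i) => i > 0 && line.trim() === "---");
  if (end === -1) return { data: {}, content: file };

  const data: Record<string, string> = {};
  for (const line of lines.slice(1, end)) {
    const idx = line.indexOf(":"); // split on the first colon only (dates contain colons)
    if (idx === -1) continue;
    const key = line.slice(0, idx).trim();
    // Strip optional surrounding single or double quotes from the value.
    data[key] = line.slice(idx + 1).trim().replace(/^(['"])(.*)\1$/, "$2");
  }
  return { data, content: lines.slice(end + 1).join("\n") };
}

// Hypothetical post in the same spirit as the files in _posts.
const examplePost = [
  "---",
  "title: 'Understanding Machine Learning Embeddings'",
  "date: '2023-04-01T00:00:00.000Z'",
  "excerpt: A short introduction to embeddings",
  "---",
  "Embeddings turn text into vectors.",
].join("\n");

const parsedExample = parseFrontMatter(examplePost);
console.log(parsedExample.data.title); // logs Understanding Machine Learning Embeddings
```

Keep in mind that gray-matter parses real YAML, so an unquoted date may come back as a Date object rather than a string, which NextJS cannot serialize when getServerSideProps returns the post; quoting dates in the front-matter avoids this.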

Preparing and storing the documents

The first step requires us to store our blog posts in Embedbase.

To read the blog posts, we need a small piece of code that parses the Markdown front-matter so we can store it as document metadata; this additional information improves the recommendation experience. We'll use the gray-matter library for this, so paste the following code in lib/api.ts:

```typescript
import fs from 'fs'
import { join } from 'path'
import matter from 'gray-matter'

// Get the absolute path to the posts directory
const postsDirectory = join(process.cwd(), '_posts')

export function getPostBySlug(slug: string, fields: string[] = []) {
  const realSlug = slug.replace(/\.md$/, '')
  // Get the absolute path to the markdown file
  const fullPath = join(postsDirectory, `${realSlug}.md`)
  // Read the markdown file as a string
  const fileContents = fs.readFileSync(fullPath, 'utf8')
  // Use gray-matter to parse the post metadata section
  const { data, content } = matter(fileContents)

  type Items = {
    [key: string]: string
  }

  const items: Items = {}

  // Store each requested field in the items object
  fields.forEach((field) => {
    if (field === 'slug') {
      items[field] = realSlug
    }
    if (field === 'content') {
      items[field] = content
    }
    if (typeof data[field] !== 'undefined') {
      items[field] = data[field]
    }
  })

  return items
}
```

Now we can write the script that stores our documents in Embedbase: create a file sync.ts in the scripts folder.

You'll need the glob library to list files and the Embedbase SDK, embedbase-js, to interact with the API.
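If you would rather not add glob just to list files, Node's built-in fs module can do the job. The helper below is hypothetical (listPostSlugs is not part of the repository). It lists only top-level .md files on purpose: getPostBySlug reads <slug>.md straight from the _posts folder, so posts in subfolders matched by the "_posts/**/*.md" pattern would not be found by it anyway.

```typescript
import * as fs from "node:fs";

// Hypothetical helper: slugs (file names without ".md") of the Markdown files
// directly inside `dir`, sorted alphabetically. Subfolders are ignored.
function listPostSlugs(dir: string): string[] {
  return fs
    .readdirSync(dir, { withFileTypes: true })
    .filter((entry) => entry.isFile() && entry.name.endsWith(".md"))
    .map((entry) => entry.name.replace(/\.md$/, ""))
    .sort();
}

// Usage sketch, assuming the script runs from the project root:
// const documents = listPostSlugs("_posts").map((slug) => getPostBySlug(slug, ["title", "content"]));
```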

In Embedbase, a dataset represents one of your data sources: for example, the food you eat, your shopping list, customer feedback, or product reviews.

When you add data, you need to specify a dataset; later, you can query this dataset, or several datasets at the same time, to get recommendations.
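As an illustration of querying several datasets at once, here is a sketch that merges scored hits coming from multiple datasets into a single top-k list. The Hit type, the dataset names other than recsys, and the scores are hypothetical; this is not the embedbase-js API, only the merging idea.

```typescript
// Hypothetical shape: one scored hit from one dataset (higher score = more similar).
type Hit = { dataset: string; id: string; score: number };

// Merge the hits of every dataset (in any order) and keep the `limit` best overall.
function mergeTopK(resultsByDataset: Record<string, Hit[]>, limit: number): Hit[] {
  return Object.values(resultsByDataset)
    .flat()
    .sort((a, b) => b.score - a.score)
    .slice(0, limit);
}

const merged = mergeTopK(
  {
    recsys: [
      { dataset: "recsys", id: "post-a", score: 0.91 },
      { dataset: "recsys", id: "post-b", score: 0.72 },
    ],
    "product-reviews": [{ dataset: "product-reviews", id: "review-1", score: 0.85 }],
  },
  2,
);

console.log(merged.map((h) => `${h.dataset}/${h.id}`)); // logs [ 'recsys/post-a', 'product-reviews/review-1' ]
```

Merging raw scores like this only makes sense when every dataset was embedded with the same model, so that the scores are comparable.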

Alright, let's finally implement the script that sends your data to Embedbase. Paste the following code in scripts/sync.ts:

import glob from "glob";

import { splitText, createClient, BatchAddDocument } from 'embedbase-js'

import { getPostBySlug } from "../lib/api";

try {

// load the .env.local file to get the api key

require("dotenv").config({ path: ".env.local" });

} catch (e) {

console.log("No .env file found" + e);

}

// you can find the api key at https://app.embedbase.xyz

const apiKey = process.env.EMBEDBASE_API_KEY;

// this is using the hosted instance

const url = 'https://api.embedbase.xyz'

const embedbase = createClient(url, apiKey)

const batch = async (myList: any[], fn: (chunk: any[]) => Promise<any>) => {

const batchSize = 100;

// add to embedbase by batches of size 100

return Promise.all(

myList.reduce((acc: BatchAddDocument[][], chunk, i) => {

if (i % batchSize === 0) {

acc.push(myList.slice(i, i + batchSize));

}

return acc;

// here we are using the batchAdd method to send the documents to embedbase

}, []).map(fn)

)

}

const sync = async () => {

const pathToPost = (path: string) => {

// We will use the function we created in the previous step

// to parse the post content and metadata

const post = getPostBySlug(path.split("/").slice(-1)[0], [

'title',

'date',

'slug',

'excerpt',

'content'

])

return {

data: post.content,

metadata: {

path: post.slug,

title: post.title,

date: post.date,

excerpt: post.excerpt,

}

}

};

// read all files under _posts/* with .md extension

const documents = glob.sync("_posts/**/*.md").map(pathToPost);

// using chunks is useful to send batches of documents to embedbase

// this is useful when you send a lot of data

const chunks = []

await Promise.all(documents.map((document) =>

splitText(document.data).map(({ chunk, start, end }) => chunks.push({

data: chunk,

metadata: document.metadata,

})))

)

const datasetId = `recsys`

console.log(`Syncing to ${datasetId} ${chunks.length} documents`);

// add to embedbase by batches of size 100

return batch(chunks, (chunk) => embedbase.dataset(datasetId).batchAdd(chunk))

.then((e) => e.flat())

.then((e) => console.log(`Synced ${e.length} documents to ${datasetId}`, e))

.catch(console.error);

}

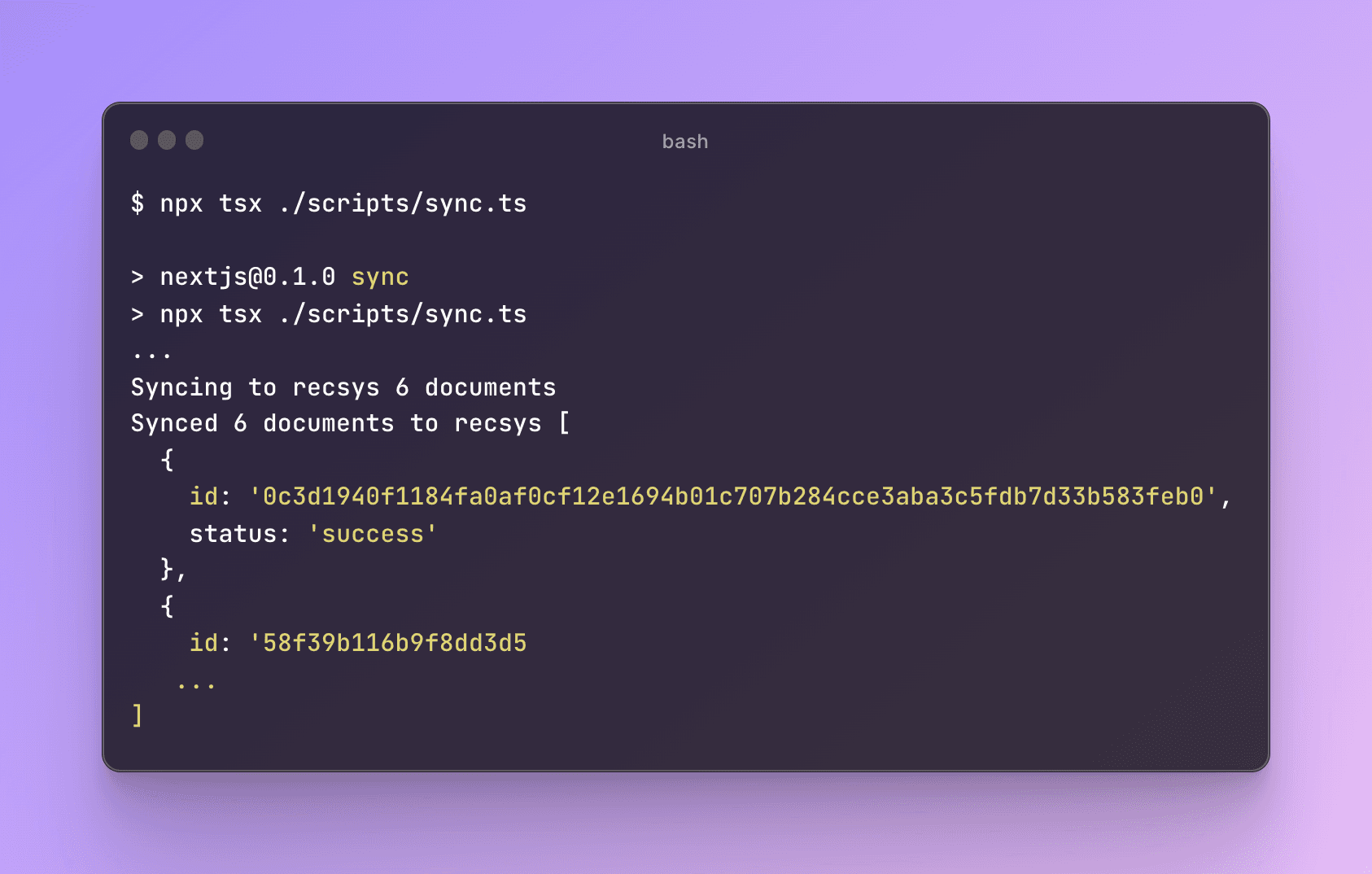

sync();Great, you can run it now:

npx tsx ./scripts/sync.tsThis is what you should be seeing:

Protip: you can now visualise your data in the Embedbase dashboard (which is open-source) here:

Or ask questions about it using ChatGPT here (make sure to tick the "recsys" dataset):
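Two ideas in scripts/sync.ts deserve a closer look: splitText cuts each post into chunks (so a long post becomes several documents sharing the same metadata), and batch groups those chunks 100 at a time, starting every batch at once through Promise.all. The sketch below re-implements both ideas in plain TypeScript under stated assumptions: chunkWords counts words and uses arbitrary sizes, whereas splitText's actual unit and defaults aren't shown in this post, and runSequentially is a hypothetical alternative that sends one batch after another.

```typescript
// Sketch only: neither function is the embedbase-js implementation.
type Chunk = { chunk: string; start: number; end: number };

// Windows of `size` words, each overlapping the previous one by `overlap` words.
// start/end are word indices (end exclusive).
function chunkWords(text: string, size: number, overlap: number): Chunk[] {
  if (overlap >= size) throw new Error("overlap must be smaller than size");
  const words = text.split(/\s+/).filter(Boolean);
  const chunks: Chunk[] = [];
  for (let start = 0; start < words.length; start += size - overlap) {
    const end = Math.min(start + size, words.length);
    chunks.push({ chunk: words.slice(start, end).join(" "), start, end });
    if (end === words.length) break; // last window reached the end of the text
  }
  return chunks;
}

// Split a list into groups of at most `batchSize` items.
function toBatches<T>(items: T[], batchSize: number): T[][] {
  const batches: T[][] = [];
  for (let i = 0; i < items.length; i += batchSize) {
    batches.push(items.slice(i, i + batchSize));
  }
  return batches;
}

// Send batches one after another instead of all at once.
async function runSequentially<T, R>(batches: T[][], send: (batch: T[]) => Promise<R>): Promise<R[]> {
  const results: R[] = [];
  for (const batch of batches) {
    results.push(await send(batch)); // wait for each batch before starting the next
  }
  return results;
}

console.log(chunkWords("a b c d e f g", 3, 1).map((c) => c.chunk)); // logs [ 'a b c', 'c d e', 'e f g' ]

runSequentially(toBatches([1, 2, 3, 4, 5], 2), async (batch) => batch.length).then((sizes) => {
  console.log(sizes); // logs [ 2, 2, 1 ]
});
```

In sync.ts you could, for example, replace the batch call with runSequentially(toBatches(chunks, 100), (b) => embedbase.dataset(datasetId).batchAdd(b)); it trades a slower sync for fewer simultaneous requests.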

Implementing the recommendation function

Now we want to get recommendations for our blog posts, so we will add an API endpoint in pages/api/recommend.ts (if you are unfamiliar with NextJS API routes, check out this guide):

```typescript
import type { NextApiRequest, NextApiResponse } from "next";
import { createClient } from "embedbase-js";

// Let's create an Embedbase client with our API key
const embedbase = createClient("https://api.embedbase.xyz", process.env.EMBEDBASE_API_KEY);

export default async function recommend(req: NextApiRequest, res: NextApiResponse) {
  const query = req.body.query;
  if (!query) {
    res.status(400).json({ error: "Missing query" });
    return;
  }

  const datasetId = "recsys";
  // even though the function is called search, in this case we actually
  // get recommendations: the stored chunks most similar to the query
  const results = await embedbase.dataset(datasetId).search(query, {
    // We want to get the first 4 results
    limit: 4,
  });

  res.status(200).json(results);
}
```

Building the blog interface

All we have to do now is connect it all in a friendly user interface and we're done!

Components

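As a reminder, we are using tailwindcss for styling, which lets you "Rapidly build modern websites without ever leaving your HTML". We'll build three components, starting with components/BlogSection.tsx, which lists the recommended posts and shows a loading placeholder while there are none yet: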

```tsx
interface BlogPost {
  id: string
  title: string
  href: string
  date: string
  snippet: string
}

interface BlogSectionProps {
  posts: BlogPost[]
}

export default function BlogSection({ posts }: BlogSectionProps) {
  return (
    <div className="bg-white py-24 sm:py-32">
      <div className="mx-auto max-w-7xl px-6 lg:px-8">
        <div className="mx-auto max-w-2xl">
          <h2 className="text-3xl font-bold tracking-tight text-gray-900 sm:text-4xl">From the blog</h2>
          <div className="mt-10 space-y-16 border-t border-gray-200 pt-10 sm:mt-16 sm:pt-16">
            {/* Loading skeleton: five placeholder rows while there are no posts yet */}
            {posts.length === 0 && (
              <div role="status" className="max-w-md p-4 space-y-4 border border-gray-200 divide-y divide-gray-200 rounded shadow animate-pulse dark:divide-gray-700 md:p-6 dark:border-gray-700">
                {[0, 1, 2, 3, 4].map((i) => (
                  <div key={i} className={i === 0 ? 'flex items-center justify-between' : 'flex items-center justify-between pt-4'}>
                    <div>
                      <div className="h-2.5 bg-gray-300 rounded-full dark:bg-gray-600 w-24 mb-2.5"></div>
                      <div className="w-32 h-2 bg-gray-200 rounded-full dark:bg-gray-700"></div>
                    </div>
                    <div className="h-2.5 bg-gray-300 rounded-full dark:bg-gray-700 w-12"></div>
                  </div>
                ))}
                <span className="sr-only">Loading...</span>
              </div>
            )}
            {posts.map((post) => (
              <article key={post.id} className="flex max-w-xl flex-col items-start justify-between">
                <div className="flex items-center gap-x-4 text-xs">
                  <time dateTime={post.date} className="text-gray-500">
                    {post.date}
                  </time>
                </div>
                <div className="group relative">
                  <h3 className="mt-3 text-lg font-semibold leading-6 text-gray-900 group-hover:text-gray-600">
                    <a href={post.href}>
                      <span className="absolute inset-0" />
                      {post.title}
                    </a>
                  </h3>
                  <p className="mt-5 line-clamp-3 text-sm leading-6 text-gray-600">{post.snippet}</p>
                </div>
              </article>
            ))}
          </div>
        </div>
      </div>
    </div>
  )
}
```

Next, components/ContentSection.tsx displays the post title and renders its content with a Markdown component:

```tsx
import Markdown from './Markdown';

interface ContentSectionProps {
  title: string
  content: string
}

export default function ContentSection({ title, content }: ContentSectionProps) {
  return (
    <div className="bg-white px-6 py-32 lg:px-8 prose lg:prose-xl">
      <div className="mx-auto max-w-3xl text-base leading-7 text-gray-700">
        <h1 className="mt-2 text-3xl font-bold tracking-tight text-gray-900 sm:text-4xl">{title}</h1>
        <Markdown>{content}</Markdown>
      </div>
    </div>
  )
}
```

Finally, components/Markdown.tsx renders the Markdown with react-markdown and highlights code blocks with react-syntax-highlighter. Fence metadata such as {1,3-5} additionally marks lines 1 and 3 to 5 with a data="highlight" attribute that you can style:

```tsx
import ReactMarkdown from "react-markdown";
import { PrismLight as SyntaxHighlighter } from "react-syntax-highlighter";
import tsx from "react-syntax-highlighter/dist/cjs/languages/prism/tsx";
import typescript from "react-syntax-highlighter/dist/cjs/languages/prism/typescript";
import scss from "react-syntax-highlighter/dist/cjs/languages/prism/scss";
import bash from "react-syntax-highlighter/dist/cjs/languages/prism/bash";
import markdown from "react-syntax-highlighter/dist/cjs/languages/prism/markdown";
import json from "react-syntax-highlighter/dist/cjs/languages/prism/json";
import { oneDark } from "react-syntax-highlighter/dist/cjs/styles/prism";
import rangeParser from "parse-numeric-range";

// PrismLight only knows the languages we register explicitly
SyntaxHighlighter.registerLanguage("tsx", tsx);
SyntaxHighlighter.registerLanguage("typescript", typescript);
SyntaxHighlighter.registerLanguage("scss", scss);
SyntaxHighlighter.registerLanguage("bash", bash);
SyntaxHighlighter.registerLanguage("markdown", markdown);
SyntaxHighlighter.registerLanguage("json", json);

const Markdown = ({ children }) => {
  const syntaxTheme = oneDark;

  const MarkdownComponents: object = {
    // `inline` is destructured so it is not spread onto the <code> element
    code({ node, inline, className, ...props }) {
      // e.g. "language-tsx" for a fence tagged with tsx
      const hasLang = /language-(\w+)/.exec(className || "");
      // extra fence metadata, e.g. "{1,3-5}"
      const hasMeta = node?.data?.meta;

      // parse the line ranges to highlight once per code block
      const match = hasMeta ? node.data.meta.replace(/\s/g, "").match(/{([\d,-]+)}/) : null;
      const highlightLines: number[] = hasMeta ? rangeParser(match ? match[1] : "0") : [];

      // called for every line: returns the props to set on that line
      const applyHighlights = (lineNumber: number) =>
        hasMeta
          ? { data: highlightLines.includes(lineNumber) ? "highlight" : undefined }
          : {};

      return hasLang ? (
        <SyntaxHighlighter
          style={syntaxTheme}
          language={hasLang[1]}
          PreTag="div"
          className="codeStyle"
          showLineNumbers={true}
          wrapLines={Boolean(hasMeta)}
          useInlineStyles={true}
          lineProps={applyHighlights}
        >
          {/* drop the trailing newline so no empty last line is rendered */}
          {String(props.children).replace(/\n$/, "")}
        </SyntaxHighlighter>
      ) : (
        <code className={className} {...props} />
      );
    },
  };

  return <ReactMarkdown components={MarkdownComponents}>{children}</ReactMarkdown>;
};

export default Markdown;
```

Pages

When it comes to pages, we'll tweak pages/index.tsx to redirect to the first blog post page:

```tsx
import Head from 'next/head'
import { useRouter } from 'next/router'
import { useEffect } from 'react'

export default function Home() {
  const router = useRouter()

  useEffect(() => {
    // replace (rather than push) so the back button doesn't return to this redirect page
    router.replace('/posts/understanding-machine-learning-embeddings')
  }, [router])

  return (
    <>
      <Head>
        <title>Embedbase recommendation engine</title>
        <meta name="description" content="Embedbase recommendation engine" />
        <meta name="viewport" content="width=device-width, initial-scale=1" />
        <link rel="icon" href="/favicon.ico" />
      </Head>
    </>
  )
}
```

Then create the post page, pages/posts/[post].tsx, which uses the components we built previously together with the recommend API endpoint:

```tsx
import useSWR, { Fetcher } from "swr";
import BlogSection from "../../components/BlogSection";
import ContentSection from "../../components/ContentSection";
import { ClientSearchData } from "embedbase-js";
import { getPostBySlug } from "../../lib/api";

export default function Index({ post }) {
  // Ask our API endpoint for the chunks most similar to the current post
  const fetcherSearch: Fetcher<ClientSearchData, string> = () =>
    fetch('/api/recommend', {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify({ query: post.content }),
    }).then((res) => res.json());

  // The SWR cache key includes the slug so every post gets its own recommendations
  const { data: similarities } = useSWR(`recommend:${post.slug}`, fetcherSearch);

  return (
    <main className='flex flex-col md:flex-row'>
      <div className='flex-1'>
        <ContentSection title={post.title} content={post.content} />
      </div>
      <aside className='md:w-1/3 md:ml-8'>
        <BlogSection posts={similarities
          // @ts-ignore
          ?.filter((result) => result.metadata?.path !== post.slug)
          .map((similarity) => ({
            id: similarity.hash,
            // @ts-ignore
            title: similarity.metadata?.title,
            // absolute link to the recommended post
            // @ts-ignore
            href: `/posts/${similarity.metadata?.path}`,
            // @ts-ignore
            date: similarity.metadata?.date?.split('T')[0],
            // @ts-ignore
            snippet: similarity.metadata?.excerpt,
          })) || []} />
      </aside>
    </main>
  )
}

export const getServerSideProps = async (ctx) => {
  const { post: postPath } = ctx.params
  const post = getPostBySlug(postPath, [
    'title',
    'date',
    'slug',
    'excerpt',
    'content'
  ])

  return {
    props: {
      post: post,
    },
  }
}
```

Head to the browser to see the results at http://localhost:3000. You should see this:

Of course, feel free to tweak the style 😁.
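One refinement you may want: because scripts/sync.ts stores each post as several chunks sharing the same metadata, the search can return more than one chunk of the same post, so the sidebar may list a post twice (and filtering out the current post after limit: 4 can leave fewer than four entries). Below is a minimal sketch of a helper that drops the current post and keeps one entry per path, assuming the results arrive best match first. SearchHit only declares the fields the post page reads; it is an assumed subset, not the embedbase-js type, and toRecommendations is a hypothetical name.

```typescript
// Assumed subset of a search result: only the fields the post page reads.
type SearchHit = {
  hash: string;
  metadata?: { path?: string; title?: string; date?: string; excerpt?: string };
};

// Same shape as the BlogPost interface expected by BlogSection.
type BlogPost = { id: string; title: string; href: string; date: string; snippet: string };

// Skip chunks of the post being read, keep the first (best-ranked) chunk of every
// other post, and map each one to the props BlogSection expects.
function toRecommendations(hits: SearchHit[], currentSlug: string): BlogPost[] {
  const seen = new Set<string>();
  const posts: BlogPost[] = [];
  for (const hit of hits) {
    const path = hit.metadata?.path;
    if (!path || path === currentSlug || seen.has(path)) continue;
    seen.add(path);
    posts.push({
      id: hit.hash,
      title: hit.metadata?.title ?? "",
      href: `/posts/${path}`,
      date: hit.metadata?.date?.split("T")[0] ?? "",
      snippet: hit.metadata?.excerpt ?? "",
    });
  }
  return posts;
}
```

In the post page you would then pass posts={toRecommendations(similarities ?? [], post.slug)} to BlogSection (a type assertion may be needed), possibly after raising limit in pages/api/recommend.ts so that enough distinct posts remain after deduplication.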

Closing thoughts

In summary, we have:

- Created a few blog posts

- Prepared and stored our blog posts in Embedbase

- Created the recommendation engine in a few lines of code

- Built an interface to display blog posts and their recommendations

Thank you for reading this blog post! You can find the complete code on the "complete" branch of the repository.

If you liked this blog post, leave a star ⭐️ on https://github.com/different-ai/embedbase. If you have any feedback, issues are highly appreciated ❤️. If you want to self-host it, please book a demo 😁. Please feel free to contact us for any help or join the Discord community 🔥.